Mark Zuckerberg, who must be feeling pretty sore about having backed Trump, is trying to drown his sorrows in llama farming. Not the dumb llamas that spit as well as your mother-in-law, but digital llamas, namely Meta's famous LLMs, which could well revolutionize your personal projects with their new approach!

He has just announced the release of Llama 4, Meta's new multimodal model (it understands both text and images), trained on nearly 30 trillion tokens (about twice as much as Llama 3) and with a context window of 10 million tokens. Basically, it's like feeding it the equivalent of 8,000 pages of text, the complete Bible + the entire Lord of the Rings trilogy + your microwave's user manual, and it will remember all of it.

Available in two versions, it is the first Llama model to use the MoE (Mixture of Experts) architecture. Instead of running the entire network on every input, an MoE model is split into specialized sub-networks (the "experts") and activates only the ones needed for each piece of the input, so only a fraction of the model works on any given token.
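To make the routing idea concrete, here is a minimal Python sketch of a generic top-k mixture-of-experts layer, not Meta's implementation: a gate scores every expert for the incoming token, only the top_k best-scoring experts actually run, and their outputs are blended with softmax weights. The toy experts (make_expert), the vector sizes and the gate weights are hypothetical stand-ins for the large feed-forward blocks of a real model.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(token, experts, gate_weights, top_k=2):
    """Route one token vector through the top_k experts chosen by the gate.

    token        -- the token's hidden vector (list of floats)
    experts      -- one callable per expert, mapping a vector to a vector
    gate_weights -- one gate vector per expert, used to score the token
    Returns the blended output and the indices of the experts that ran.
    """
    # Gate: one score per expert (dot product of the token with that expert's gate vector).
    scores = [sum(t * g for t, g in zip(token, gate)) for gate in gate_weights]
    # Keep only the top_k highest-scoring experts; all the others are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Turn the chosen experts' scores into mixing weights that sum to 1.
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(token)
    for w, i in zip(weights, chosen):
        y = experts[i](token)  # only the selected experts do any computation
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


def make_expert(k):
    """Hypothetical toy expert: scales its input by k (stands in for a feed-forward block)."""
    return lambda v: [k * x for x in v]


if __name__ == "__main__":
    random.seed(0)
    experts = [make_expert(i + 1) for i in range(16)]  # 16 experts, like Scout
    gates = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(16)]
    out, chosen = moe_layer([0.5, -0.2, 0.1, 0.9], experts, gates, top_k=2)
    print("experts used:", chosen, "output:", out)
```

With top_k=2 out of 16 experts, only 2 experts do any computation for that token, which is where the savings come from; the other 14 still have to sit in memory.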

The Scout version is the one I just tested. It has:

- 17 billion active parameters with 16 experts (MoE)

- 109 billion parameters in total

- A footprint small enough to fit on a single NVIDIA H100 GPU (with Int4 quantization)

- A context window of 10 million tokens

- A strong focus on efficiency and optimized performance
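A back-of-the-envelope calculation shows why Int4 quantization matters for the single-H100 claim. With MoE, all 109 billion parameters must be loaded even though only 17 billion are used per token. The sketch below assumes an 80 GB H100 and counts the weights only; the KV cache (which grows with context length), activations and quantization scales all come on top, so real memory use is higher.

```python
# Assumption: an 80 GB H100 (other H100 variants have different amounts of memory).
H100_MEMORY_GB = 80


def weight_memory_gb(n_params, bits_per_param):
    """Memory needed just to store the weights, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


# With MoE, every expert must be resident in memory, so we count Scout's
# 109B total parameters, not the 17B that are active per token.
SCOUT_TOTAL_PARAMS = 109e9

for label, bits in [("BF16", 16), ("Int8", 8), ("Int4", 4)]:
    gb = weight_memory_gb(SCOUT_TOTAL_PARAMS, bits)
    verdict = "fits" if gb < H100_MEMORY_GB else "does not fit"
    print(f"Scout weights in {label}: {gb:.1f} GB -> {verdict} in one {H100_MEMORY_GB} GB H100")
```

At 16 bits, Scout's weights alone would need about 218 GB; at 4 bits, about 54.5 GB, which leaves some headroom on an 80 GB card.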

The other model, Maverick, is even beefier and is designed to compete head-on with models like GPT-4o or Gemini 2.0 Flash. It has:

- 17 billion active parameters with 128 experts

- 400 billion parameters in total

- A footprint that fits on a single NVIDIA H100 host (a multi-GPU server, according to Meta)

- And, as I said, performance comparable to high-end models

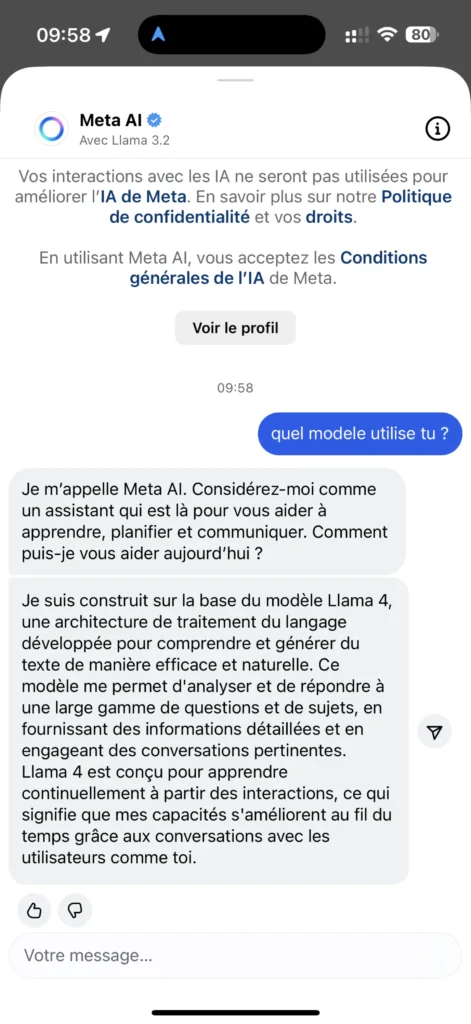

These models are available for download from Meta and Hugging Face if you have a machine powerful enough to run them, but you can also try them via WhatsApp, Messenger, Instagram Direct or directly on Meta.ai. And it's free!

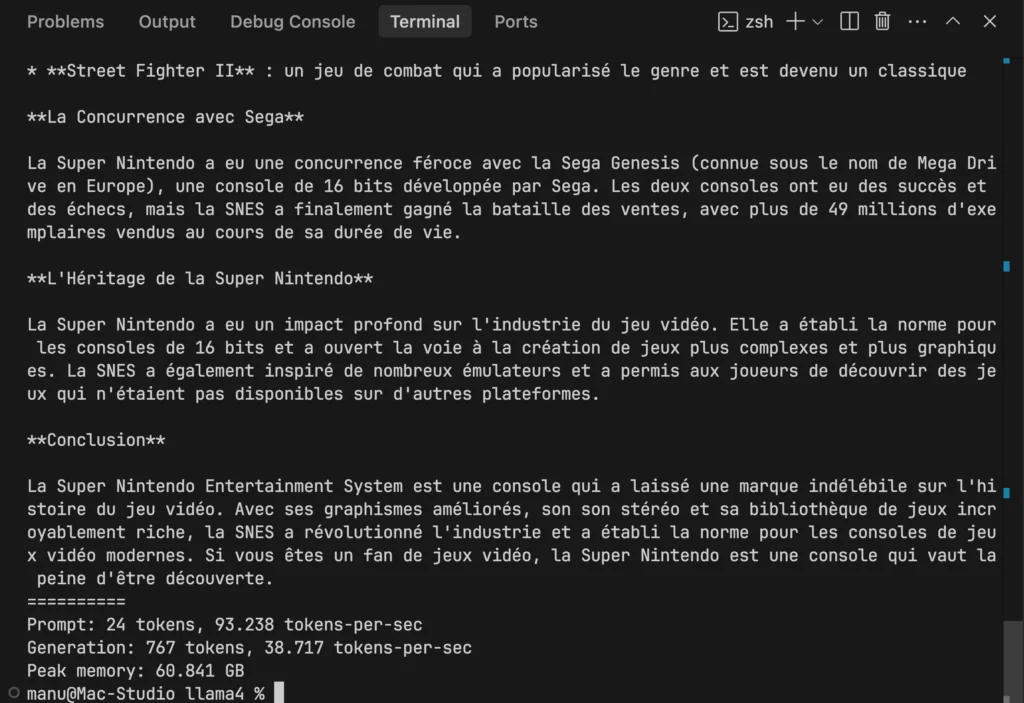

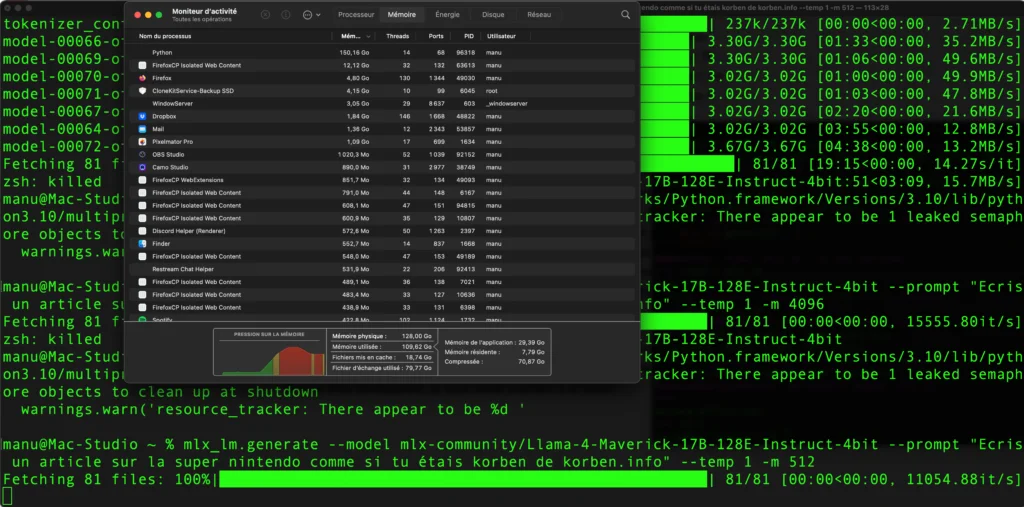

For my part, I managed to run Scout on my Mac Studio M4 at 39 tokens/second. I'm happy: it's pretty fast, and I'll be able to use this model in my world-conquest dev projects! To give you an idea, I generated a complete 569-word answer to a question about the history of the Super Nintendo in under 20 seconds. And that's on consumer hardware, not a supercomputer.

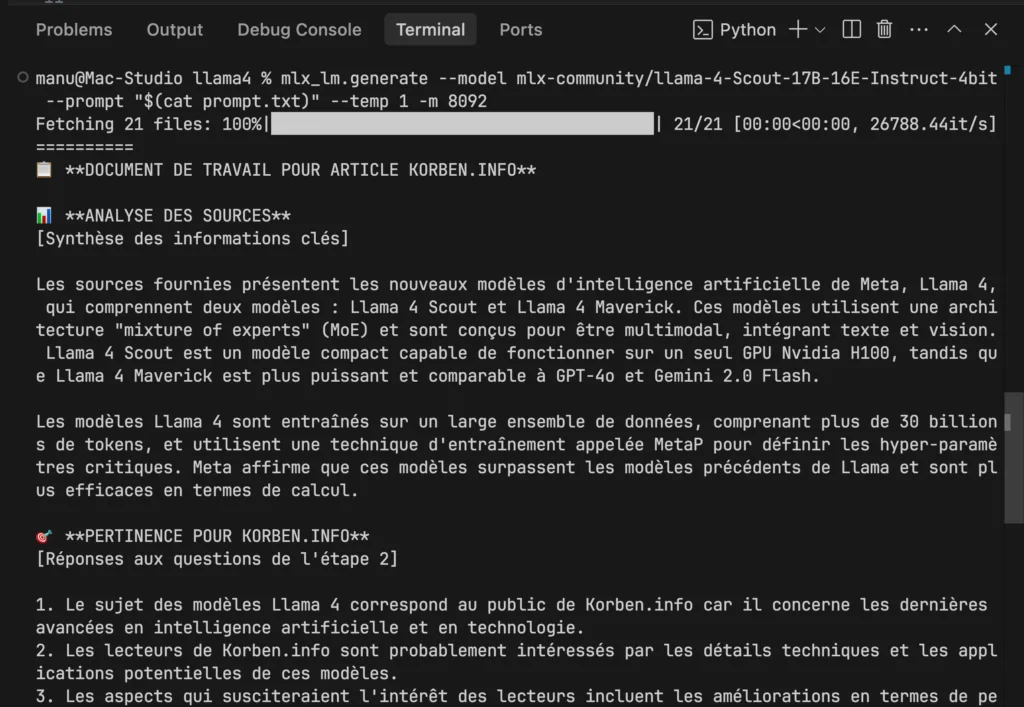
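The speed figures above are consistent with each other. Here is a quick sanity check in Python, assuming about 1.33 tokens per word (a common rule of thumb for English text; the real ratio depends on the tokenizer and the language, and French usually needs more tokens per word) and ignoring prompt-processing time:

```python
# Assumption: ~1.33 tokens per English word (rule of thumb; depends on tokenizer and language).
TOKENS_PER_WORD = 1.33


def generation_seconds(words, tokens_per_second, tokens_per_word=TOKENS_PER_WORD):
    """Estimated decode time for an answer of `words` words, ignoring prompt processing."""
    return words * tokens_per_word / tokens_per_second


# Figures reported above: a 569-word answer at 39 tokens/second on a Mac Studio.
print(f"Estimated generation time: {generation_seconds(569, 39):.1f} s")
```

This gives roughly 19.4 seconds for 569 words at 39 tokens/second, in line with the "under 20 seconds" observed.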

If you want to try it, you'll need to install MLX-LM with pip (pip install mlx-lm) and run this command:

    mlx_lm.generate --model mlx-community/llama-4-Scout-17B-16E-Instruct-4bit --prompt "What is 3+2?" --temp 1 -m 4096

I tried Maverick too, but in vain (that was predictable…).

What's really interesting about these new models is the mixture-of-experts (MoE) approach: it drastically reduces the computation needed to produce an answer, which lowers costs and latency, and above all, it will let Meta build larger (read: smarter…) models with the same resources. And that's great!
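A common rule of thumb puts the cost of a forward pass at about 2 FLOPs per parameter used per token (one multiply and one add). Applied to the article's figures, it shows how much less work an MoE model does per token than a hypothetical dense model of the same total size. This is only an approximation: it ignores attention over the context, the router itself and memory bandwidth, and MoE saves compute, not memory, since every expert must still be loaded.

```python
def forward_flops_per_token(params_used):
    """Rule of thumb: about 2 FLOPs (one multiply, one add) per parameter used per token."""
    return 2 * params_used


# (active parameters, total parameters), as given in the article
MODELS = {
    "Llama 4 Scout": (17e9, 109e9),
    "Llama 4 Maverick": (17e9, 400e9),
}

for name, (active, total) in MODELS.items():
    moe = forward_flops_per_token(active)
    dense = forward_flops_per_token(total)  # hypothetical dense model with the same total size
    print(
        f"{name}: ~{moe / 1e9:.0f} GFLOPs per token vs ~{dense / 1e9:.0f} for a dense equivalent "
        f"({active / total:.1%} of the weights used per token)"
    )
```

Both models cost about the same per token because they share the same 17 billion active parameters; Maverick simply has far more experts to choose from.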

With such a context window, you could have it analyze the entire source code of a complex project, process several scientific papers at once, or even summarize all your emails from the last 6 months in a single request. No more overpriced APIs and data that ends up who knows where. With Scout on your Mac (or a PC with enough under the hood), you keep all your sensitive data at home or within your company while getting performance that competes with cloud solutions.
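Before pasting a whole project into the prompt, you can estimate whether it fits in the window. This hypothetical helper walks a directory and converts character counts to tokens, assuming roughly 4 characters per token (a rough heuristic; for an exact count you would run the model's own tokenizer). The extension list and the choice to skip hidden directories are arbitrary and should be adapted to your project.

```python
import os

CHARS_PER_TOKEN = 4           # assumption: rough average for English prose and code
CONTEXT_TOKENS = 10_000_000   # Scout's advertised context window
# Hypothetical filter: adapt to the languages in your project.
SOURCE_EXTENSIONS = {".py", ".js", ".ts", ".go", ".rs", ".c", ".h", ".java", ".md"}


def estimate_tokens(text):
    """Approximate token count from character count (use the real tokenizer for exact numbers)."""
    return len(text) // CHARS_PER_TOKEN


def estimate_repo_tokens(root, extensions=SOURCE_EXTENSIONS):
    """Walk `root` and sum the estimated tokens of every matching source file."""
    total = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune hidden directories such as .git or .venv in place so os.walk skips them.
        dirnames[:] = [d for d in dirnames if not d.startswith(".")]
        for name in filenames:
            if os.path.splitext(name)[1] not in extensions:
                continue
            try:
                with open(os.path.join(dirpath, name), encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
            except OSError:
                pass  # unreadable file: skip it
    return total


if __name__ == "__main__":
    tokens = estimate_repo_tokens(".")
    share = tokens / CONTEXT_TOKENS
    print(f"~{tokens:,} tokens, {share:.1%} of a 10M-token window")
```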

Meta also plans to release Llama 4 Behemoth soon, which will please the Satanists, with 288 billion active parameters across 16 experts, for nearly 2 trillion parameters in total! That's crazy! This model is even used to teach the other Llama 4 models through distillation. On paper, it would outperform GPT-4.5, Claude Sonnet 3.7 and Gemini 2.0 Pro (on STEM benchmarks).
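Distillation means training a smaller "student" model to reproduce the output distribution of a larger "teacher" rather than only the correct answer. The sketch below is the classic formulation (Hinton et al., 2015), not Meta's recipe: the teacher's and student's logits are softened with a temperature, the student is penalized by the KL divergence between the two distributions, and that term is mixed with ordinary cross-entropy on the true token. Meta says it used its own loss that weights the soft and hard targets dynamically during training, which this fixed-alpha version does not reproduce; the logits in the usage example are made up.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax, with logits divided by `temperature` first."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, hard_label, temperature=2.0, alpha=0.5):
    """Classic knowledge-distillation loss for a single prediction.

    alpha weights the soft (teacher) term against the hard-label term; both weights
    are fixed here, unlike the dynamic weighting Meta describes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on temperature-softened distributions, scaled by T^2
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft = temperature ** 2 * kl
    # Ordinary cross-entropy against the ground-truth token, at temperature 1
    hard = -math.log(softmax(student_logits)[hard_label])
    return alpha * soft + (1 - alpha) * hard


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits over a 3-token vocabulary
    student = [2.0, 1.5, 0.2]  # hypothetical student logits
    print(distillation_loss(student, teacher, hard_label=0))
```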

Llama 4 is therefore exactly the kind of model that lets you write, code, or analyze images and documents 100% locally, as long as you have the right hardware. On the other hand, Meta has put a restrictive license on its models, which means that although they are presented as open source, they are not open source at all. Another debated issue is that the training data for these models allegedly included pirated works (books…). It also remains to be seen whether the model is politically neutral or whether Llama 4 supports Trump ^^.

In short, it's a good model anyway, because it gives access to a powerful, intelligent LLM while saving hardware and energy resources. If you're a developer, I really encourage you to try it and tell me what you think in the comments.
