Are you tired of paying a fortune for ChatGPT just to generate code you could have written yourself?

At the same time, it's still great to cut your development time in half, or even by two thirds, with an AI assistant. But it would be even better to have one as powerful as the commercial solutions, yet entirely free and, above all, respectful of your privacy!

So if you're a developer who finds yourself juggling Stack Overflow, GitHub, and the official docs for every function you write, this article is for you!

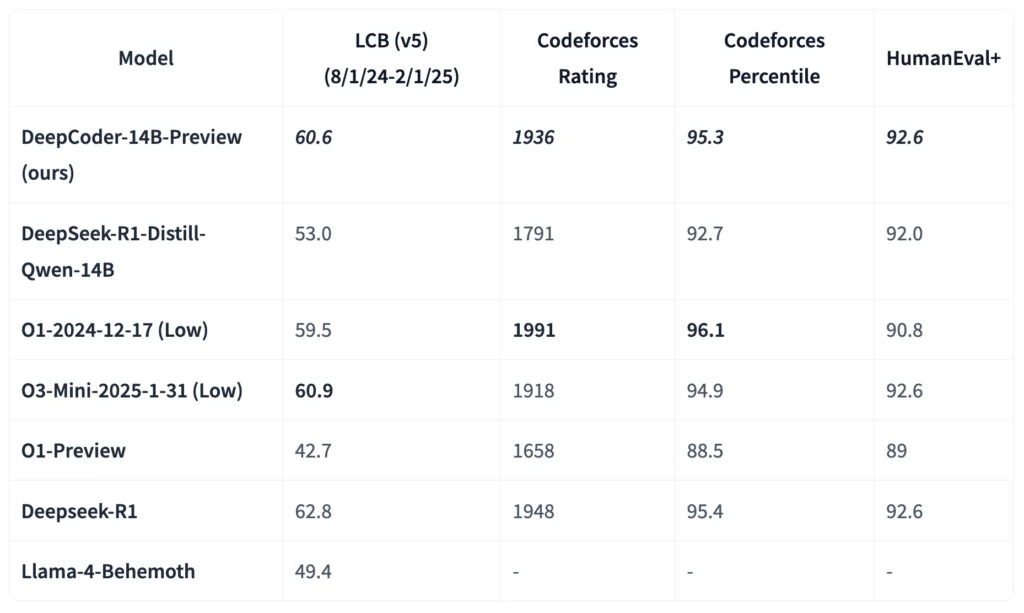

Developed by Together AI and Agentica, DeepCoder-14B is part of the new wave of medium-sized models that compete with juggernauts boasting hundreds of billions of parameters. And the results are stunning: on LiveCodeBench (LCB), it scored 65.4, only 3.2% below o3-mini, which nonetheless requires five times more compute.

Here are its specs:

- Only 14 billion parameters (vs hundreds of billions for GPT-4)

- Runs on a server with mid-range GPUs

- Faster inference and lower latency

- Deployment costs cut by a factor of 10 or more

According to its designers, the model is particularly effective at tasks such as refactoring, unit test generation, and performance optimization… areas where its specialized training gives it an edge over general-purpose models.

Above all, this means an independent developer can now run a professional-grade coding assistant on their own machine, without selling a kidney to pay for APIs or worrying that their proprietary code will end up in the training dataset of the next commercial model.

Beyond being efficient, it is completely transparent: the team shared not only the model but also the entire training process. And unlike math, where high-quality problems and solutions abound, training an AI purely on code is not simple.

How do you reward the model properly without letting it cheat by memorizing solutions? And how do you handle the long reasoning sequences needed to solve complex problems?

The team behind DeepCoder tackled these challenges with several key innovations:

1. Surgical data curation:

They built a pipeline that rigorously filters programming problems, yielding 24,000 that are guaranteed to be valid, sufficiently complex, and free of duplicates. This data quality is the solid foundation DeepCoder is built on.
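To make the idea concrete, here is a minimal Python sketch of such a filter, assuming each problem carries its statement, its unit tests, and a flag saying whether a verified reference solution passed them. The `Problem` class, the `curate` function, the `min_tests` threshold, and the case- and whitespace-insensitive hashing used for deduplication are hypothetical illustrations, not DeepCoder's actual pipeline (which also screens problems for complexity).

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Problem:
    statement: str
    tests: list[str] = field(default_factory=list)
    # Hypothetical flag: did a verified reference solution pass every test?
    reference_passes: bool = False


def _fingerprint(text: str) -> str:
    """Hash the statement after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def curate(problems: list[Problem], min_tests: int = 5) -> list[Problem]:
    """Keep only valid, sufficiently tested, non-duplicate problems."""
    seen: set[str] = set()
    kept: list[Problem] = []
    for p in problems:
        if not p.reference_passes:
            continue  # validity: the tests must be satisfiable by a known solution
        if len(p.tests) < min_tests:
            continue  # too few tests make it easy to "pass" with a wrong answer
        fp = _fingerprint(p.statement)
        if fp in seen:
            continue  # duplicate of a problem we already kept
        seen.add(fp)
        kept.append(p)
    return kept
```

Requiring several tests per problem matters because of the all-or-nothing reward described next: with only one or two tests, a hard-coded answer could collect the full reward.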

2. A no-frills reward function:

No partial rewards or complex metrics… The model receives a positive signal only if its code passes all the unit tests within a time limit. This brutal but effective approach forces the model to actually understand and solve problems rather than look for shortcuts.
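An all-or-nothing reward of this kind can be sketched in a few lines of Python, assuming the candidate solution and its unit tests are plain Python source strings, with tests written as `assert` statements. `sparse_code_reward` and its 5-second default timeout are hypothetical; the sketch runs the code in a bare subprocess, whereas a real training setup would need a proper sandbox for untrusted, model-generated code.

```python
import subprocess
import sys


def sparse_code_reward(solution: str, tests: list[str], timeout_s: float = 5.0) -> float:
    """Return 1.0 only if the solution passes every test within timeout_s, else 0.0.

    Hypothetical helper: there is no partial credit, so passing 9 out of 10
    tests still scores 0.
    """
    program = solution + "\n\n" + "\n".join(tests) + "\n"
    try:
        # A failing assert, an uncaught exception, or a syntax error all
        # produce a non-zero exit code.
        # WARNING: no sandboxing here; only suitable for a demo.
        result = subprocess.run(
            [sys.executable, "-c", program],
            capture_output=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return 0.0  # too slow counts as a failure
    return 1.0 if result.returncode == 0 else 0.0
```

For example, `sparse_code_reward("def add(a, b):\n    return 5", ["assert add(2, 3) == 5", "assert add(-1, 1) == 0"])` returns `0.0`: the hard-coded answer passes the first test but not the second, and half-right code earns nothing.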

3. GRPO+ (Group Relative Policy Optimization):

An improved version of the algorithm behind DeepSeek-R1's success, with changes for greater stability during long training runs. As a result, the model keeps improving where others plateau.
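The "group relative" part can be illustrated with plain GRPO, not the GRPO+ variant: for each prompt the model samples a group of answers, and each answer's advantage is its reward normalized by the group's mean and standard deviation, which removes the need for a separate value network. The function names and the 0.2 clipping range below are illustrative assumptions; the second function shows the PPO-style clipped objective applied to each sample.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each reward against the mean/std of its own group (plain GRPO)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # With all-or-nothing rewards, a group where every answer passed (or every
    # answer failed) has sigma == 0, so all advantages are 0 and the group
    # contributes no learning signal.
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_objective(ratio: float, advantage: float, clip_eps: float = 0.2) -> float:
    """PPO-style clipped surrogate for one sample; ratio = pi_new / pi_old."""
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    # Taking the minimum keeps a single update from moving the policy too far.
    return min(ratio * advantage, clipped_ratio * advantage)
```

For a group of four answers where two pass all tests, `group_relative_advantages([1.0, 0.0, 0.0, 1.0])` gives roughly `[1, -1, -1, 1]`: passing answers are reinforced and failing ones discouraged, relative to their siblings.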

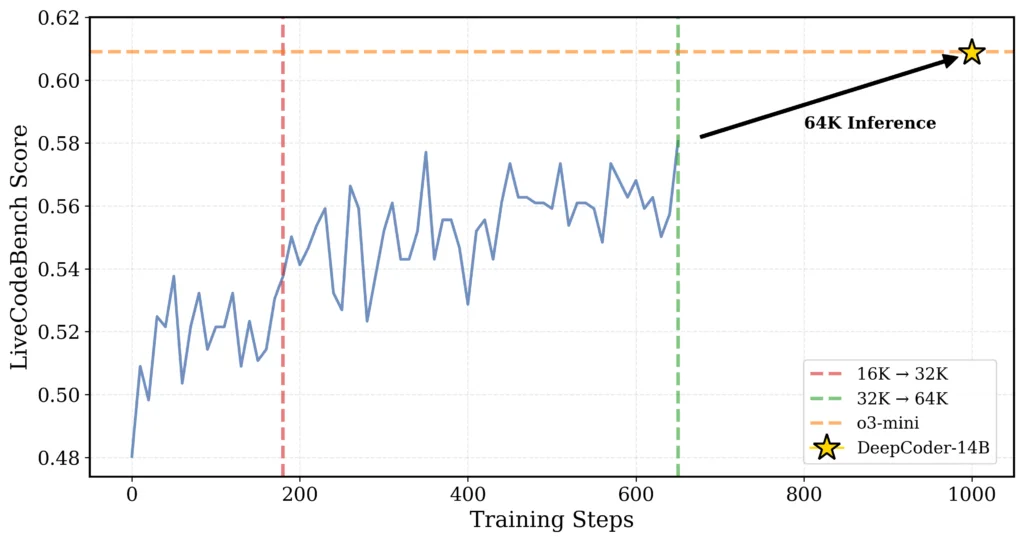

4. Progressive context extension:

This is like training an athlete on short sprints first, then gradually over longer distances, until they can run a marathon at a sprinter's pace. Concretely, the team first trained the model on short sequences, then gradually increased the context size from 16K to 32K tokens. And the icing on the cake: the final model can handle up to 64K tokens, enough to tackle genuinely complex problems.
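One simple way to express such a curriculum is a step-indexed schedule for the maximum context length. In this Python sketch, the 16K and 32K stages come from the text, but the training step at which the switch happens (1,000 here) is a made-up placeholder and `max_context_for_step` is a hypothetical helper. The 64K figure is the length the final model handles at inference time, not a training stage, so it does not appear in the schedule.

```python
from bisect import bisect_right

# (first training step of the stage, max context length in tokens).
# The switch step of 1_000 is a placeholder, not DeepCoder's real value.
CONTEXT_SCHEDULE = [(0, 16_384), (1_000, 32_768)]


def max_context_for_step(step: int, schedule=CONTEXT_SCHEDULE) -> int:
    """Return the context limit in force at a given training step."""
    starts = [start for start, _ in schedule]
    idx = bisect_right(starts, step) - 1  # last stage that has already started
    if idx < 0:
        raise ValueError("step precedes the first stage of the schedule")
    return schedule[idx][1]
```

Keeping the schedule as data rather than if/else branches makes it easy to add a third stage without touching the lookup logic.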

The most impressive technique is “one-off pipelining”, which speeds up training by up to 2x by cleverly reorganizing the model's sampling and update stages. Without this optimization, training would have taken 5 weeks instead of 2.5 on 32 H100 GPUs.
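The scheduling idea behind one-off pipelining can be mimicked with a background thread: while the trainer updates the weights on batch k, the sampler is already generating batch k+1 with the previous weights, so each batch is at most one update behind. This toy Python sketch uses hypothetical `sample` and `train` callbacks that only track a weight version number; it illustrates the overlap, not verl-pipeline's actual implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def one_off_pipeline(
    num_steps: int,
    sample: Callable[[int], Any],
    train: Callable[[Any, int], int],
) -> list[Any]:
    """Overlap sampling of the next batch with training on the current one.

    sample(version) generates a batch using the given weight version;
    train(batch, version) updates the weights and returns the new version.
    Returns the batches in the order they were trained on.
    """
    used_batches: list[Any] = []
    version = 0
    with ThreadPoolExecutor(max_workers=1) as sampler:
        pending = sampler.submit(sample, version)  # first batch: nothing to overlap yet
        for step in range(num_steps):
            batch = pending.result()  # wait for the batch sampled in the background
            used_batches.append(batch)
            if step + 1 < num_steps:
                # Start sampling the next batch now, with the weights from
                # *before* this update: this is the "one-off" staleness.
                pending = sampler.submit(sample, version)
            version = train(batch, version)  # runs while the sampler works
    return used_batches
```

With `sample=lambda v: v` and `train=lambda batch, v: v + 1`, four steps return `[0, 0, 1, 2]`: after the first step, every batch was generated with weights exactly one update old, which is the price paid for keeping both stages busy at once.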

And for the deep learning nerds: yes, all the code for these optimizations is available on GitHub. Christmas came early, friends!

We already had Llama, Mistral, DeepSeek… but DeepCoder-14B takes openness even further, because here is what is actually shared as open source:

- The complete model and its weights (on Hugging Face)

- All 24,000 filtered training problems

- The training code and complete logs (even errors!)

- System optimizations such as verl-pipeline

All of this is released under a very permissive license, which means you can use it, modify it, and even integrate it into commercial projects without paying a penny. The impact on the ecosystem's various players is therefore massive. For researchers, it is unprecedented access to an advanced model trained with RL (reinforcement learning), along with its complete training process. In short, a gold mine for understanding and improving AI techniques.

For developers like us, it is above all a free, professional-grade assistant that runs locally with no data leakage. For companies, it is also a viable alternative to expensive APIs, with the option of fine-tuning the model on their own codebase. For example, for a team of 10 developers, switching to DeepCoder-14B could save €1,200 to €2,400 per year compared with paid API solutions, while giving full control over data.
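Put per developer, those savings amount to a modest monthly subscription. The back-of-the-envelope division below uses only the article's own figures and ignores the cost of local hardware and electricity:

$$
\frac{1\,200\ \text{EUR}}{10\ \text{developers} \times 12\ \text{months}} = 10\ \text{EUR per developer per month},
\qquad
\frac{2\,400\ \text{EUR}}{10 \times 12} = 20\ \text{EUR per developer per month}
$$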

So, how do you get this little gem into your hands, trembling with excitement?

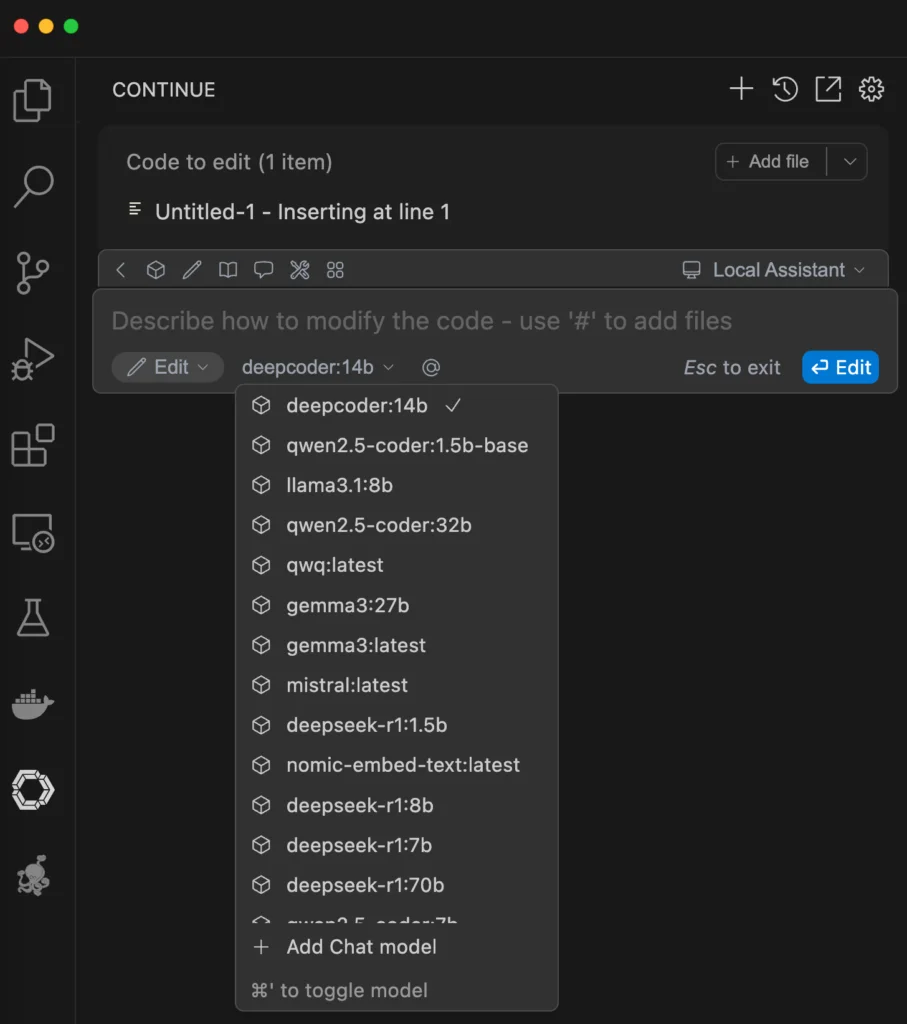

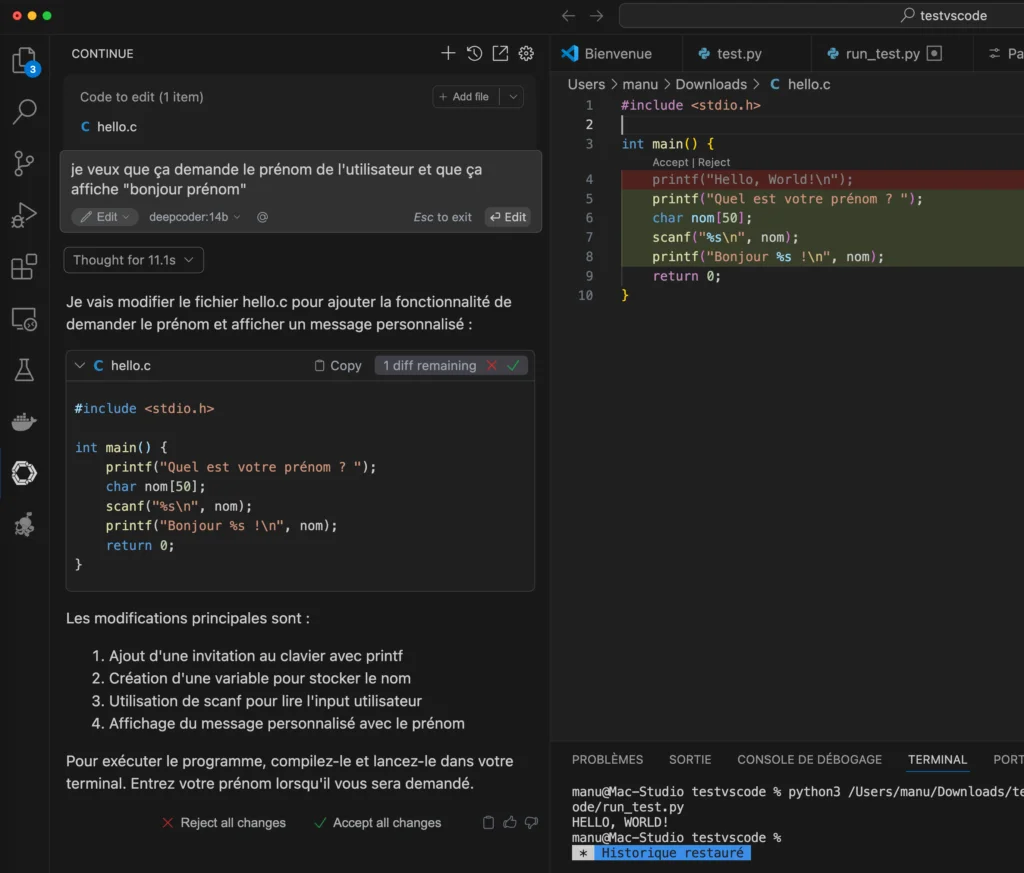

One of the best ways to integrate DeepCoder-14B into VS Code is the Continue extension, which makes it easy to use local models for code assistance.

Make sure you have:

- A graphics card with at least 24GB of VRAM (ideally an NVIDIA RTX 3090 or better), or a well-specced M3/M4 Mac

- At least 16GB of system RAM (32GB recommended)

- At least 10GB of available disk space for the model
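The 24GB VRAM and roughly 10GB disk figures line up with simple arithmetic on the weights: memory ≈ parameters × bits per parameter ÷ 8. The Python helper below is a hypothetical back-of-the-envelope estimate; it assumes the local build is quantized to about 4 bits per weight (typical of Ollama's default downloads, but check your model's tag) and ignores the KV cache and runtime overhead, which grow with context length.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes.

    Ignores the KV cache, activations, and runtime overhead.
    """
    return num_params * bits_per_param / 8 / 1e9


# 14 billion parameters at different precisions (assumed bit widths).
for label, bits in [("16-bit (unquantized)", 16), ("8-bit", 8), ("~4-bit quantized", 4)]:
    print(f"{label}: ~{weight_memory_gb(14e9, bits):.0f} GB")
```

Unquantized 16-bit weights (about 28 GB) would not fit on a 24GB card, while a roughly 4-bit build (about 7 GB of weights) leaves headroom for the KV cache that long contexts require.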

Next, install the extension and Ollama, then pull DeepCoder into Ollama from a terminal.

Then, in Visual Studio Code or one of its forks, choose Ollama as the chat model provider and Autodetect as the model. The list should show all the models you have installed, including DeepCoder-14B.
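Continue talks to the local Ollama server for you, but you can also query the model directly from a script. This Python sketch uses only the standard library and Ollama's `/api/generate` endpoint on its default port 11434. The model tag `deepcoder:14b` is an assumption, so use whatever name `ollama list` shows on your machine; `build_request` and `ask_deepcoder` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL = "deepcoder:14b"  # assumed tag: check `ollama list` for the exact name


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming generation request for the local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_deepcoder(prompt: str, model: str = MODEL, timeout_s: float = 300.0) -> str:
    """Send the prompt and return the generated text (requires Ollama running)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout_s) as resp:
        # With "stream": False, Ollama returns one JSON object whose
        # "response" field holds the full completion.
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With Ollama running and the model pulled, `print(ask_deepcoder("Write a Python function that reverses a string."))` prints the model's answer. Nothing leaves your machine: the request goes to `localhost`.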

And there you go: vibe coding, as they say…

Of course, DeepCoder has its limits too… no multimodal capabilities (it works on text only), knowledge limited to its training data, and more difficulty with some very complex problems. But honestly, for something 100% free and local, it is already exceptional.

By combining top performance, a reasonable size, and a fully open-source approach, DeepCoder-14B opens the way to a new era where cutting-edge AI is no longer reserved for the tech giants… And for you as a developer, this is the perfect opportunity to regain control of your AI tools without compromising the quality of your code or the confidentiality of your projects.
